Ontology Intrinsic Completeness ________________________________________________________________________________________

Ph. A. Martin [0000-0002-6793-8760] and, for the sections 3 and 5.2 to 5.4, O. Corby [0000-0001-6610-0969]

Comparison with a previous article.

This article strongly extends some ideas of a

previous article (focused on the notion of

“comparability between objects”) by focusing on the more general notion of

ontology completeness: the absolute or relative number of objects (in the evaluated KB)

that comply to a specification (of particular relations that should exist between

particular objects) given by a user if the evaluated KB.

Indeed, the way it was defined in the previous article, comparability can retrospectively be seen

was a specialization of completeness: this was convenient for the previous article but not generic

enough for evaluating an ontology completeness. More precisely, in this previous article,

comparability between objects is defined as

i) checking that identity/equivalence relations or negations of them exist between these

objects (this step is not mandatory with ontology completeness), and then

ii) checking a particular completeness of objects wrt. a given set of particular (kinds of)

relations (the present article introduces a model that supports the specification and checking

of more subkinds of completeness).

Abstract for Section 2 (“Generic Specification Model”; this part

is to be converted into an article;

the sentences giving additional information that is not in this other article

are in “olive” color; + in October 2022:

comments or texts from OC are in this blue,

my first texts/comments in answer to them is in this red

(or green) and my

my last texts/comments to them is in this orange.

In the field of ontology quality evaluation, a classic general definition of the completeness of

an ontology – or, more generally, a knowledge base (KB) – is:

the degree to which information required to satisfy a specification are present in this KB.

A restriction of this general definition is often adopted

in the domain of information systems ontologies: the ontological contents must

be exhaustive with respect to the domain that the ontology aims to represent.

Thus, most current completeness measures are metrics about

relatively how many objects from a reference KB (an idealized one or another existing KB)

are represented in the evaluated KB.

In this article, they are called “extrinsic completeness” measures since they

compare the evaluated KB to an idealized one or another existing KB, hence an external KB.

Instead, this article focuses on “intrinsic (ontology/KB) completeness”:

how many – or how relatively many – objects in the evaluated KB comply to

a specification about particular relations that should exist from/to/between particular objects

in this KB. Such specifications are typically KB design recommendations: ontology design patterns,

KB design best practices, rules from methodologies, etc.

These particular KB design recommendations do not refer to a reference KB.

There are many KB checking tools or knowledge acquisition tools that implement the checking of

some particular design recommendations. This article goes further: it proposes

a generic model (an ontology of intrinsic completeness notions) and a tool exploiting it which

i) enable their users to formally specify their own intrinsic completeness measures, and then

ii) enable the automatic checking of the compliance of a KB to these measures.

The first point thus also supports the formal definition and extension of existing KB design

recommendations and the specification of KB quality measures not usually categorized as

completeness measures.

The genericity of the cited model and the automatic checkability of specifications based on this

model are based on

i) some specializations of a generic function with knowledge representations (KRs) or other

functions as parameters,

ii) the exploitation of the implication-related feature of any inference engine selected by

the user for parsing and exploiting these above cited parameters, and

iii) the idea that the KB must explicitly specify whether the relations from the

specifications are true (or false) or in which context they are true (or false), e.g., when,

where, according to whom, etc.

To sum up, these last three points answer several research questions that can be merged into

the following one: how to support KB users (or KB design recommendations authors) in defining

intrinsic completeness measures that are automatically checkable whichever the used formalism and

inference engine? One advantage is to extend the KB quality evaluations that someone can perform,

Then, since the result can point to the detected missing relations, this result is also useful

for knowledge acquisition purposes, e.g.

for increasing the inferences that the KB supports

or for representing relations to support the FAIR principles (Findability,

Accessibility, Interoperability, and Reuse of digital assets).

As illustrations of experimental implementations and validations of this approach, this article also

shows i) an implemented interface displaying interesting types of relations and

parameters to use for checking intrinsic completeness, and

ii) some results of the evaluation of some well known foundational ontologies.

Keywords: Ontology completeness ⋅ KB quality evaluation ⋅ Ontology design patterns ⋅ Knowledge organization ⋅ OWL ⋅ SPARQL

Table of Contents

1. Introduction 2. General Approach: An Ontology-based Genericity Wrt. Goals, Formalisms and Inference Engines 2.1. The Function C*, its Kinds of Parameters and Specializations; Terminology and Conventions 2.2. Some Examples and Definitions of Existential Or Universal Completenesses 2.3. Genericity Wrt. Formalisms and Inference Engines 2.4. Some Advantages of Universal Completeness (and of Existential Completeness) 2.5. Comparison of the General Approach With Some Other Approaches or Works 2.6. Overview of Important Kinds of Parameter Values Via a Simple User Interface 2.7. Ontology of Operators, Common Criteria or Best Practices Related to Intrinsic Completeness 2.8. Evaluation of the General Approach Wrt. Subtype Or Exclusion Relations In Some Foundational Ontologies 3. Implementations Via a SPARQL Engine Exploiting an OWL Inference Engine 3.1. Exploiting OWL, SPARQL and SHACL For Checking Or Stating Relations Between Types 3.1.1. Using SPARQL Queries To Check Some OWL-RL/QL Relations Between Types 3.1.2. Types To State Or Check That Particular Types Are Related By Subtype Or Equivalence Relations, Or Cannot Be So 3.1.3. Checking Classes Via SHACL 4. Ontology and Implementations of Notions Useful For the 3rd Parameter 4.1. Exploitation of All Relations Satisfying Particular Criteria 4.2. Object Selection Wrt. Quantifiers and Modalities 4.3. Minimal Differentia Between Particular Objects 4.4. Constraints on Each Shared Genus Between Particular Objects 4.5. Synthesis of Comparisons With Other Works 5. Ontology and Exploitation of Relation Types Useful For the 2nd Parameter 5.1. Generic Relations For Generalizations Or Implications, and Their Negations, Hence For Inference Maximization 5.2. Interest of Checking Implication and Generalization Relations 5.2.1. Examples of Inconsistencies Detected Via SubtypeOf Relations and Negations For Them 5.2.2. Reducing Implicit Redundancies Between Types By Systematically Using SubtypeOf or Equivalence Relations (and Negations For Them) 5.2.3. Increasing Knowledge Querying Possibilities 5.2.4. Exploitation of Implication and Exclusion Relations Between Non-Type Objects 5.3. Exploitation of “Definition Element” Relations and Their Exclusions 5.3.1. Definition of "Definition Element" 5.3.2. Avoiding All Implicit Redundancies and Reaching Completeness Wrt. All “Defined Relations” 5.3.3. Finding and Avoiding Most Implicit Redundancies 5.4. Exploitation of Some Other Transitive Relations and Their Exclusions 5.5. Exploitation of Type Relations and Their Exclusions 5.6. Synthesis of Comparisons With Other Works 6. Conclusion 7. References

1. Introduction

KB quality. As detailed in [Zaveri et al., 2016], a survey on quality assessment for Linked Data, evaluating the quality of an ontology – or, more generally, a knowledge base (KB) and even more generally, a dataset – often involves evaluating various dimensions such as i) those about the accessibility of the dataset (e.g. those typically called Availability, Licensing, Interlinking, Security, Performance), and ii) other dimensions such as those typically called Interoperability, Understandability, Semantic accuracy, Conciseness and Completeness.

Dataset completeness. As noted in [Zaveri et al., 2016], dataset completeness commonly refers to a degree to which the “information required to satisfy some given criteria or query” are present in the considered dataset. Seen as a set of information objects, a KB is a dataset (in [Zaveri et al., 2016] too). The KB objects, alias resources, are either types or non-type objects. These last ones are either statements or individuals. A statement is an asserted non-empty set of relations. In the terminology associated to the RDF(S) model [w3C, 2014a], relations are binary, often loosely referred to as “properties” and more precisely as “property instances”.

Extrinsic (dataset) completeness. A restriction of the previous general definition for ontology completeness is often adopted in the domain of information systems ontologies: “the ontological contents must be exhaustive with respect to the domain that the ontology aims to represent” [Tambassi, 2021]. In KB quality assessment surveys referring to the completeness of an ontology or KB, e.g. [Raad & Cruz, 2015] and [Zaveri et al., 2016] and [Wilson et al., 2022], this notion measures whether “the domain of interest is appropriately covered” and this measure involves a comparison to existing or idealized reference KBs or to expected results when using such “external” KBs – hence, this article calls this notion “extrinsic model based completeness”. E.g., completeness oracles [Galárraga & Razniewski, 2017], i.e. rules or queries estimating the information missing in the KB for answering a given query correctly, refer to an idealized KB. [Raad & Cruz, 2015] distinguishes “gold standard-based”, “corpus-based” and “task-based” approaches. [Zaveri et al., 2016] refers to schema/property/population completeness, and almost all metrics it gives for them are about relatively how many objects from a reference dataset are represented in the evaluated dataset.

Intrinsic (KB) completeness. This article gives a generic model allowing the specification of measures for the “intrinsic completeness” notion(s), not the extrinsic one(s). For now, these measures can be introduced as follows: each of them is a metric about how many objects – or relatively how many objects – in a given set comply to a semantic specification, i.e. one that specifies particular (kinds of) semantic relations that each evaluated object should be source or destination of. E.g., each class should have an informal definition and be connected to at least one other class by either a subclass/subclassof relation or an exclusion relation. As detailed in the next paragraph, such specifications can typically be KB design recommendations: ontology design patterns, KB design best practices, rules from methodologies, etc. These particular KB design recommendations do not refer to a reference KB or to the real world. Thus, this notion is not similar to the “ontology completeness” of [Grüuninger & Fox, 1995] where four “completeness theorems” define whether a KB is complete wrt. a specification stated in first-order logic. In [Zaveri et al., 2016] and [Wilson et al., 2022], the word “intrinsic” instead means “independent of the context of use” and the intrinsic completeness of this article is i) not referred to in [Zaveri et al., 2016], and ii) referred to via the words “coverage” (non-contextual domain-related completeness) or “ontology compliance” (non-contextual structure-related completeness) in [Wilson et al., 2022].

Purposes. Unlike extrinsic model based completeness, intrinsic completeness is adapted for evaluating the degree to which a given set of objects complies with KB design recommendations, such as particular ontology patterns [Presutti & Gangemi, 2008] [Dodds & Davis, 2012] (and the avoidance of anti-patterns [Ruy et al., 2017] [Roussey et al., 2007]), best practices [Mendes et al., 2012] [Farias et al., 2017] or methodologies (e.g. Methontology, Diligent, NeOn and Moddals [Cuenca et al., 2020]). Such an evaluation eases the difficult task of selecting or creating better KBs for knowledge sharing, retrieval, comparison or inference purposes.

Need for a generic specification model. Many KB evaluation measures can be viewed as intrinsic completeness measures for particular relation types. Many checks performed by ontology checking tools also evaluate particular cases of intrinsic completeness, e.g. OntoCheck [Schober et al., 2012], Oops! [Poveda-Villalón et al., 2014], Ontometrics [Reiz et al., 2020], Delta [Kehagias et al., 2021], and OntoSeer [Bhattacharyya & Mutharaju, 2022] (this last one and OntoCheck have been implemented as plug-ins for the ontology editor Protégé). However, it seems that no previous research has provided a generic way to specify intrinsic completeness measures (in an executable way) and thence enable their categorization and application-dependent generalizations (executable non-predefined ones), whichever the evaluated kinds of relations. It is then also difficult to realize that many existing KB evaluation criteria or methods are particular cases of a same generic one.

Related research questions. In addition to this genericity issue, some research questions – which are related and apparently original – are then: i) how to define the notion(s) of intrinsic completeness, more precisely than above, not only in a generic way but also one that is automatically checkable, ii) how to extend KB design recommendations and represent knowledge for supporting an automatic checking of the use of particular relations while still allowing knowledge providers to sometimes disagree with such a use (this for example rules out checking that a particular relation is asserted whenever its signature allows such an assertion), and iii) how to specify intrinsic completeness for the increase or maximization of the entering of particular relations by knowledge providers and then of inferences from these relations (e.g., especially useful relations such as subtype and exclusion relations, or type relations to useful meta-classes such as those of the OntoClean methodology [Guarino & Welty, 2009])? When the representations have to be precise or reusable – as is for example the case in foundational or top-level ontologies – these questions are important.

Use of generic functions; insufficiencies of constraints and axioms. To answer these research questions, this article introduces an ontology-based approach which is generic with respect to KRLs, inference engines and application domains or goals. The starting point of this approach is the use of C*, one possible polymorphic function theoretically usable for checking any of the intrinsic completenes notions described in this article. Any particular set of parameters of C* specifies one particular intrinsic completeness check. For practical uses, restrictions of C* are also defined, e.g. CN (which returns the number of specified kinds of objects in the specified KB) and C% (which returns the percentage of specified kinds of objects in the specified KB). Descriptions about C* are also about its restrictions. From now on, the word “specification” refers to an intrinsic completeness specification for a KB, typically via C% since it allows the checking of a 100% compliance. Checking a KB via a function like C% – or a query performing this particular check – is not like adding an axiom or a logic-based constraint to ensure that the KB complies to it. Indeed, axioms and classic kinds of constraints do not give percentages or other results and, since they generally have to be written within a KB, they are generally not usable for comparing KBs without copying or modifying them. Using functions (such as C% or within a constraint) also has advantages for clarity, concision and modularity purposes, as well as for lowering expressiveness requirements. One reason is that a function can encapsulate many instructions, sub-functions or rules, and can support default parameters. Second, a function allows the components of a specification to be distributed onto different parameters. Thus, the KRL or constraint-language required for writing the parameters does not have to be as expressive as the one that would be required for writing an axiom or a constraint instead of the function call. Furthermore, identifying these parameters and useful values for them is a first step for creating an ontology of (elements for) intrinsic completeness. As later illustrated, several useful kinds of such elements would require a second-order logic notation to be used in axioms or constraints, i.e., without the kinds of functions or special types described in this article. Most KRLs and constraint languages do not have such an expressiveness. As another example, except in particular cases, SHACL-core [w3c, 2017] cannot (but SHACL-SPARQL can) specify that particular objects of a KB (e.g. classes) should be connected to each other via particular relations (e.g. exclusion relations) is a negated or positive way (i.e. with or without a negation on these relations). Yet, this article shows that this kind of specification is very useful and often easy to comply with.

Section 2: the general approach.

- Section 2.1 starts the description of this above cited ontology – and introduces the approach – via a description of the parameters of C* and its restrictions. For genericity purposes, C* can exploit contextualizing meta-statements for taking into account such constraints as well as modalities and negations. This also allows the generalization of existing KB design recommendations to make them easier to comply with: relations following the advocated relations may also be negated or contextualized. This article does not advocate any particular KB design recommendations, it identifies interesting features for intrinsic completeness and a way to allow KB users to exploit these features and combine them.

- Section 2.2 provides examples and formal definitions for kinds of specifications which are simple but already generally more powerful than existing intrinsic completeness measures (which, in addition, unlike here, are hard-coded).

- Section 2.3 discusses the genericity of the approach with respect to KRLs and inference engines, while Section 2.5 compares this general approach with some other approaches or works. Presenting examples before these two sections help better understand these two sections and avoids making them over-general. Similarly, if the above research questions or statements of the problems still seem abstract, the examples instantiate these points and avoid the need for a separate section for them.

- Section 2.4 presents advantages of two very general kinds of intrinsic completeness introduced in Section 2.2: i) one here called “existential completeness” which underlies most other works related to intrinsic completeness measures, and ii) an original and more powerful one here called “universal completeness”. Although this article does not advocate any particular intrinsic completeness check, Section 2.4 shows that universal completeness not only maximizes the checking of the specified relation types but, from some viewpoints, is relevant to use (as a better KB quality measure) whenever existential completeness is.

- The proposed approach is supported by a Web-accessible tool at http://www.webkb.org/.../??? and, for this tool, Section 2.6 shows a simple user interface. This one gives an overview of the various kinds of parameters and useful kinds of values for these parameters. More values are proposed in Section 4 and Section 5.

- Section 2.7 proposes an ontology of operators, common criteria or best practices related to intrinsic completeness. This ontology is a way to complete the comparisons of Section 2.5 and the overview and inter-relations of Section 2.6.

- Section 2.8 evaluates the general approach with respect to subtype or exclusion relations in some foundational ontologies.

Section 3: implementation in SPARQL+OWL. This section shows how OWL and SPARQL [w3c, 2013a] (or SHACL [w3c, 2017]) can be used to i) implement CN–, CN and C% for evaluating intrinsic completeness via strict subtype relations, exclusion relations and equivalence relations, and ii) efficiently build such a KB. Section 4 and Section 5 reuse the framework for proposing SPARQL+OWL queries that implement more complex specifications.

Section 4: ontology and implementations of notions useful for the 3rd parameter of C*. This section generalizes Section 2.6 for the specification of useful constraints on relations between particular objects in the KB (e.g., regarding “genus and differentia” structures), once the source objects and the types of relations from them have been specified.

Section 5: ontology and exploitation of relation types useful for the 2nd parameter of C*. This section generalizes Section 2.6 regarding the specification of transitive relations – especially, generalization, equivalence and exclusion relations – as well as exclusion relations and instanceOf relations. This section also presents the advantages of using such specifications for maximizing inferences and, more specifically, for search purposes as well as the detection of inconsistencies and redundancies.

2. General Approach: an Ontology-based Genericity Wrt.

Goals, Formalisms and Inference Engines

2.1. The Function C*, its Kinds of Parameters and Specializations;

Terminology and Conventions

C* and the kinds of parameters it requires.

Theoretically, a complex enough function – here named C* –

could implement all elsewhere implemented intrinsic completeness checks,

although its code might have to be often updated to handle new features.

Since the basic kinds of data used by C* can be typed and aggregated in many different ways,

C* could be defined in very different ways, using different kinds of parameters,

i.e. different signatures, even when using “named parameters” (alias

“keyword arguments”, as opposed to positional parameters).

For C* to be generic (including wrt. KRLs), C* must allow the use of only one parameter

– one logic formula or boolean test function –

fully describing which particular objects must have which particular relations to which

particular objects (or, equivalently, which particular relations must exist between which

particular objects).

As examples in later subsections illustrate, for readability and ease of use purposes,

this description of objects and relations that must exist

should also decomposable into more than one parameter, and two parameters that are themselves

sets seem sufficient.

In any case, C* has to be polymorphic: for each parameter, C* should accept different kinds of

objects. E.g., for an object selection parameter, C* should at least accept

i) a pre-determined set of objects, ii) a set of criteria to retrieve such objects in

the specified KB, and iii) a function or a query to make that retrieval.

In this article, to ease the readability and understanding of the proposed handy restrictions of C*,

positional parameters are used and the selected untyped signature of C* is

“(objSelection1, objSelection2, constraints, metric, nonCoreParameters)”.

The following points describe this list of parameters and their rationale.

For the reasons given in the introduction, since this list is also an informal

top-level ontology for some elements for intrinsic completeness, a constraint language may also

address the described notions and supports their representation within the range of its

expressiveness. E.g., in SHACL, objSelection1, objSelection2 and constraints

are respectively addressed via the relations sh:target, sh:property (along

with sh:path) and sh:constraints.

- Together, objSelection1 and objSelection2 specify

the set of objects and/or relations to be checked, i.e.

i) the set of objects from which particular relations are to be checked, and/or

ii) the set of particular relations to check, and possibly

iii) the set of objects that the destinations of the checked relations may be.

In the following examples below using the two parameters.

- objSelection1 specifies the set of objects to be checked in the evaluated KB.

E.g., the expression

PL_`∀x rdfs:Class(x)'can be used to specify in a classic Predicate Logic notation that this set is composed of every class in the KB. For readability purposes, this article also proposes and uses FS (“For Sets”), a set-builder (or list comprehension) notation.FS_{every owl:Thing}is equivalent to the previous PL expression, whileFS_{every owl:Thing}specifies a set composed of all the (named or anonymous) objects (types, individuals or statements) represented in the KB (it is therefore advisable to use expressions that are more constrained than this one). - objSelection2 specifies the types of relations from the objects referred to by

objSelection1. E.g. the expression

“

FS_{rdfs:subClassOf, owl:equivalentClass}” means that subClassOf relations and equivalentClass relations from those objects should be checked. - The set of possible destination objects for these relations is not specified: by default, any destination is allowed.

- objSelection1 specifies the set of objects to be checked in the evaluated KB.

E.g., the expression

- The 3rd parameter specifies constraints that the “objects and/or relations selected via the first two parameters” should comply with. Example of constraint: for each selected object and selected relation type, there should be at least one relation of that type from this object.

- The 4th parameter specifies the metric to be used for reporting how many – or relatively how many – of the “objects and/or relations selected via the first two parameters” comply with the “constraints specified via the 3rd parameter”. Examples of metrics and metric names are: i) “N_obj”, the number of compliant selected source objects, ii) “N_rel”, the number of compliant relations from or to the selected objects, iii) “L_obj–”, the list of non compliant source objects, iv) “%_obj”, the ratio of N_obj to the number of selected objects, v) “%_rel”, the ratio of N_rel to the number of selected relations, and vi) “%%_obj”, the average of the percentage of compliant relations from or to each of the selected objects. More complex metrics can be used, such as those of the kinds described in [Hartmann et al., 2005] (e.g. “precision and recall” based ones) and [Ning & Shihan, 2006] (e.g. “Tree Balance” and “Concept Connectivity”) or those used in Ontometrics and Delta.

- The 5th parameter specifies objects that are not essential to the specification of an intrinsic completeness, e.g. parameters about how to store or display results and error messages. Hence, this parameter is not mentioned anymore in this article.

To sum up, the above distinctions (<selections, constraints, metric>) and associated parameters seem to support the dispatching of the basic kinds of data required by C* into a complete set of exclusive categories for these basic kinds of data, i.e., into a partition for them. Thus, all the data can be dispatched without ambiguities about where to dispatch them. The above parameters can also be seen as a handy way to describe part of the model used in this article (a more common way to describe a model is to define tuples of objects).

CN, CN–, C% and C%% as handy restrictions of C*. CN, CN–, C% and C%% only have the first three parameters of C*. Using CN is like using C* with the N_obj metric as 4th parameter. Section 2.2 formally defines CN for some combinations of parameters. That formal definition can be adapted for other combinations of parameters. CN– is like C* with the L_obj– metric (this one is more useful during KB building than when comparing KBs). C% is C* with the %_obj metric, while C%% is C* with the %%_obj metric. This article provides many examples of calls to C% and CN, and thus of how C* functions can be used. C%% is also used in Section 2.8 for analyzing and comparing some top-level ontologies.

Taking into account (or not) negations and, more generally, contexts. In this article, i) a statement is a relation or a non-empty set of relations, and ii) a meta-statement is a statement that is – or can be translated into – a relation stating things about a(n inner) statement. A negated statement can be seen as – or represented via, or converted into – a statement using a “not” relation expressing a “not” operator. A meta-statement that modifies the truth status of a statement – e.g., via a relation expressing a negation, a modality, a fuzzy logic coefficient or that the inner statement is true only at a particular time or place or according to a particular person – is in this article called a contextualizing statement (alias, context) for its inner statement, the contextualized statement. Thus, a relation that changes the truth status of a statement is a contextualizing relation (e.g., a “not” relation, a modality relation to a “necessarily not” value, a probability coefficient of 0%; this article defines negation as a particular contextualization for simplifying several of its formal or informal expressions). A statement is either positive (i.e. without a meta-statement, or with a meta-statement that simply annotates it instead of contextualizing it), negative (alias, negated), or contextualized but not negated. With C* as above described, if more than one parameter is used, it is the third one that specifies the kinds of contexts that the checked relations may have or should have. Some examples in the next subsection illustrate formalizations of this taking into account of contexts, and its advantages, especially for generalizing KB design recommendations.

Rationale of the used terminology. In some KR related terminologies, unlike in this article, the word “relation” is only used for referring to a relationship between real-world entities while other words are used for referring to the representations of such relations, e.g. “predicate” in Predicate logics, “property” in RDF and some knowledge graph formalisms [Kejriwal, Knoblock & Szekely, 2021], or “edge” in another [Hogan et al, 2021]. In this article, the words “relation”, “types”, “statements”, “meta-statements” and “contexts” have the meanings given in the introduction because i) these are common meanings in KRLs in KRLs, e.g. in Conceptual Graphs [Sowa, 2000], and ii) these words are more intuitive, general (hence not tied to a particular formalism) and easy-to-use (e.g., the words "from" and "to" often have to be used in this article and associating them to the word “property” seems awkward). Thus, (KR) “objects” are either types, individuals and statements, and a type is either a class or a relation type.

Conventions and helper ontologies.

Identifiers for relation types have a lowercase initial

while other object identifiers have an uppercase initial.

“OWL” refers to OWL-2 [w3c, 2012a].

“RDFS” refers to RDFS 1.1 [w3c, 2014a].

OWL types are prefixed by “owl:”,

and RDFS types by “rdfs:”.

The other types used in this article are declared or defined in

the following two ontologies.

- One of them is named “Sub” [Martin, 2019]

(a good part of it is about subtypes, subparts and similar relations;

this ontology has over 200 types).

This does not mean that Sub needs to be read for understanding this article; it just means

that all the types used in this article are defined and organized within existing ontologies.

E.g., Sub includes

sub:owl2_implication, the most general type of implication that an OWL-2 inference engine can exploit or implement. The “sub:” prefix abbreviates the URL of Sub. In accordance with Section 2.3 (“Genericity Wrt. Formalisms and Inference Engines”), “=>” and symbols derived from it are not prefixed in the examples and definitions below. Two statements or two non-empty types are in exclusion if they cannot have a shared specialization or instance, i.e., if having one is considered an error. E.g.,owl:disjointWithis a type of exclusion relation between two classes. - The other ontology is named “IC”

(for Intrinsic Completeness) [Martin, 2022 ??]. E.g., IC includes

“intrinsic completeness checking cardinality types” such as

ic:Every-object_to_some-objectandic:Every-object_to_every-objectwhich are explained in the next subsection. IC also includes the declaration of the type for C* and its subtypes used in this article, e.g.ic:C%andic:CN. In this article, for clarity purposes, these function types are not prefixed by “ic:”. Like Sub, IC does not need to be read for understanding this article.

2.2. Examples and Definitions of Existential Or Universal Completenesses

A very simple example: specifying that every class in a KB should have a label, a comment and

a superclass.

Since every class can have a superclass (for example since rdfs:Class is a subclass of

itself), performing such a check can be a legitimate KB design recommendation.

Whether it is a best practice is not relevant here: this article does

not advocate any particular intrinsic completeness check, it identifies interesting features for

intrinsic completeness and a way to allow KB users to exploit these features and combine them.

Given the above cited conventions and descriptions of C*, here are some ways to specify this

check using C% and various KRLs.

- With Predicate Logic (extended with the possibility to use “

∈Kb” for referring to the objects – types, individuals or statements – represented in a KB identified asKb):C%( PL_` ∀c∈Kb ∃label∈Kb ∃comment∈Kb ∃superclass∈Kb rdfs:label(c,label) ∧ rdfs:comment(c,comment) ∧ rdfs:subClassOf(c,superclass) ’ ). This function call gives the percentage of classes having the specified relations. This is also a specification: the ideal result should be 100%. If an object use relations specializing the mandated relations – e.g., by using subtypes of the mandated relation types – this object is still counted as complying with the specification. A statement that is in a parameter is not asserted in the KB: like a constraint, the inference engine cannot use it for deducing other formulas and adding them to the KB. However, this statement is written with a KRL, not a constraint language. - Even for this very simple example, using Predicate logic (PL) may seem a bit cumbersome.

With some other languages, e.g. RDF+OWL/Turtle, i.e. the RDF+OWL model with a Turtle notation,

the specification would be more cumbersome but C* and derived functions ideally should or

could use such languages too when they are expressive enough for representing the wanted

specification.

E.g., with RDF+OWL/Turtle, the previous specification could be:

C%( RDF+OWL/Turtle_` [a owl:Class; rdfs:subClassOf [ owl:intersectionOf ( [a owl:Restriction; owl:onProperty rdfs:label; owl:minCardinality 1] [a owl:Restriction; owl:onProperty rdfs:comment; owl:minCardinality 1] [a owl:Restriction; owl:onProperty rdfs:subClassOf; owl:minCardinality 1] ) ] ] ’ ).As with PL above, RDF+OWL/Turtle is here used for expressing a constraint but not a regular stand-alone one. Here the corresponding stand-alone constraint in SHACL-core:sub:Shape_for_a_class_to_have_a_least_a_label_and_a_comment_and_a_subclass a sh:NodeShape ; sh:targetClass owl:Class ; sh:property [ sh:path rdfs:label ; sh:minCount 1 ; ] sh:property [ sh:path rdfs:comment ; sh:minCount 1 ; ] sh:property [ sh:path rdfs:subClassOf; sh:minCount 1 ; ] . - To cope with the increasing complexity of examples below, this article proposes the use of

several parameters and, within them,

i) FS, a Set-builder notation,

and ii) types

with a definition that C* is supposed to interpret or that is hard-coded in C*.

/*pm: this replaces "predefined constants" */

With them and in accordance with the descriptions of parameters given in Section 2.1,

the previous specification can also be written as:

C%( FS_{every rdfs:Class}, FS_{rdfs:label, rdfs:comment, rdfs:subClassOf}, FS_{ic:Every-object_to_some-object} ). The type ic:Every-object_to_some-object indicates that every object specified via the 1st parameter should have some (i.e., at least one) relation of typerdfs:labeland a (some) relation of typerdfs:commentand a relation of typerdfs:subClassOf. For an exact translation of the above Predicate Logic version, the typeic:Only_positive-relations_may_be_checkedshould also be specified to indicate that negated or contextualized relations of the three above cited relation types are not taken into account, i.e., checked. However, dropping this restriction is actually an advantage since it allows the taking into account of such relations even if they are contextualized. E.g., a label relation may be contextualized to state that a particular person gave a particular label to a particular class for a particular time (such representations may be easier to handle than searching and managing various versions of a KB). Similarly, in the case that some subtype relations are used for the categorization of animal species, some categorizations may be associated to some authors and time periods.

In this article, all sets are expressed in FS and hence, from now on,

“

Definition of CN for basic existential completeness, with FS parameters and

default values.

Here, “basic” means without taking contexts into account.

In this article, a completeness specification that uses Definition of CN for existential completeness,

with FS parameters, default values, and taking contexts into account.

With the same assumptions as for the previous definition but without the restriction

This formula – and the other ones given in this article –

can easily be adapted for non-binary relations.

Definition of C% wrt. CN. C% divides the result of CN by the number of evaluated objects.

With the previous example, since the 1st parameter specifies the set of evaluated objects,

using C% instead of CN means dividing this result of CN by the number of objects of

type Definition of CN– wrt. the PL formula for CN.

CN– returns the list of objects for which

the PL formula for CN (i.e. Formula 1 in the previous example).

Simple example of universal completeness: specifying

that every class in a KB should be explicitly represented as exclusive or non-exclusive with

every other class in the KB.

Some advantages of such a specification or of derived ones are summarized in

Section 2.4, along with reasons why, at least from some viewpoints, such specifications

are always possible to comply with.

In this example, the goal is to specify that every pair of classes should be connected by an

inferable or directly asserted, negated or not, relation of type Definition of CN for universal completeness, with FS parameters.

With the same assumptions as for Formula 2, calling Specification of exactly which contextualizing relation types should be taken into account.

The IC ontology provides types (with hopefully intuitive names) for expressing

particular constraint parameters for C*.

Instead of such types, logical formulas should also be accepted by the C* functions for their

users to be able to specify variants of such constraints when they wish to.

Regarding contexts, this means that the users should be able to specify the

contextualizing relation types that should be taken into account.

To that end, IC provides the relation type

Mandatory contextualizing relations can be similarly specified.

Specification of the types of

mandatory contextualizing relations.

By using

Completeness of the destinations of each relation of particular types (possibly in addition to

the existential or universal completeness wrt. relations of these types).

Here are two examples for subclass relations.

Section 5.2 shows how such specifications can be

used with partOf relations instead of subclassOf relations.

More precisely, Section 5.2 includes definitions and an example showing how

Independence from particular logics and inference engines.

For genericity purposes, the approach presented in this article is purposefully not

related to a particular logic, KRL, inference engine or strategy.

To that end, the explanations in this article refer to relations that are directly asserted

or are “inferable” by “the used inference engine”, and the

Predicate Logics formulas used for function definition purposes use the

“

To conclude, although the results of the function depend on the selected inference engine,

it can be said that the approach itself is independent of a particular inference engine.

This kind of genericity is an advantage and, at least in this article, there would be

no point in restricting the approach to a particular logic.

The approach has no predefined required expressiveness: the

expressiveness required to check some KRs is at most the expressiveness of these KRs.

In the previous subsection, the formulas 1, 2 and 3 use a slight extension

to classic Predicate Logics and are also second-order formulas.

However, these formulas are for definitions and explanations purposes.

They do not imply that the proposed approach requires contexts or a second-order logic.

There are several reasons for this. Universal completeness wrt. generalization, equivalence and exclusion relations between classes.

The example specification of this subsection is The counterpart of this specification with existential completeness instead of with

universal completeness, i.e. with

Building a KB complying with a universal completeness specification

does not necessarily involve much extra work.

At least for the previous example specification, building a KB

complying with it does not require entering much more lines than when not complying with it

if the following two conditions are met (as can most often be the case).

Possibility and relevancy of complying with a universal completeness

specification when complying with its existential completeness counterpart is relevant.

Here, different uses of ontologies – and then viewpoints on them –

must be distinguished:

Three advantages of the above universal completeness specification example, at least from the

formal GKS viewpoint. These advantages are related to the fact that,

in a KB complying with this specification, the number of relations with the specified relation

types is, in the above cited sense, maximal. This above universal completeness specification example

– and its advantages – can be generalized to all types:

/* Reminder: texts in “olive” color are not planned to

be included into a journal article version because some reviewers may find these texts too complex.

This is why, like this paragraph, the last sentence of the previous paragraph refers to

Section 5.2.4. */

Some advantages of existential/universal completeness for checking

software code or the organization of software libraries.

Some software checking approaches exploit

relations between software objects (such as functions, instructions and variables), e.g.

partOf relations, generalization relations and input/output relations.

Such relations are stored into graphs (often called “Program Dependence Graphs”

[Hammer & Snelting, 2009]

[Zhioua, 2017]) and may for example be created

by automatic extraction from software code (e.g., as in Information Flow Control techniques) or

by models in approaches based on model-driven engineering.

Using completeness checking functions on such graphs seems interesting.

Here are two examples. Proposal of default values for completeness specifications

and list of the ones used in this article.

The examples in this article show that some kinds of completeness specifications are more powerful or

seem more often useful than others, hence more interesting to choose as default values.

E.g., as above shown, at least from a formal GKS viewpoint,

a specification with The introduction distinguishes intrinsic completeness measures from

extrinsic completeness ones or other KB quality measures.

Compared to other approaches – e.g. classic constraint-languages, the use of axioms and

predefined measures –

the introduction also highlights the original features of the presented general approach:

it exploits the start of ontology about intrinsic completeness centered around some generic

functions which can exploit other types defined in this ontology and the implication operator

of the inference engine selected by the user. Thus, the approach has the originality of

allowing its users (the users of its functions) to create quite expressive and concise intrinsic

completeness specifications tailored to their KB evaluation needs, while writing

parameters with the KRL they wish to use.

Conversely, this ontology – and hence approach – could be reused in

some constraint-languages or query languages

to allow their users to write more concise and expressive intrinsic completeness

specifications, and then check a KB wrt. these specifications.

The next subsection (section 2.6) provides an overview of the content of the ontology.

Section 2.7 shows how the ontology categorizes types that permit the representation of

common criteria or best practices that can be related to intrinsic completeness, and thus

is another kind of comparison of the present work with other ones.

Since the other existing KB quality measures or checking tools are predefined, the

rest of this subsection shows how those that can be related to intrinsic completeness

can be represented with the introduced approach. This is also a kind of comparison with these

predefined measures.

Specification of most of the checks made by Oops!

The ontology checking tool Oops!

[Poveda-Villalón et al., 2014]) proposes a list of

41 “common pitfalls”. These semantic or lexical errors are grouped according to

four non-exclusive “ontology quality dimensions: Modelling issues, Human understanding,

Logical consistency and Real world representation”.

Oops! can automatically check 33 of them. Out of these 33, it seems that

i) 16 are about missing values or relations

which could be prevented by specifications represented via OWL definitions or

SHACL (Shapes Constraint Language) [Knublauch & Kontokostas, 2017], and

ii) 9 are inconsistency problems which could often be prevented by specifications

represented via OWL definitions (and an inference engine exploiting them).

The 8 remaining problems are more lexical (and related to names or annotations) or

related to i) the non-existence of files or objects within them, or

ii) normalization (“P25: Defining a relationship as inverse to itself”).

The 16 pitfalls about missing values or relations can be detected via

intrinsic completeness specifications. (These problems may also be detected via

OWL definitions, which seems preferable since definitions are knowledge representations

which are important not just for checking purposes). E.g.: “Coverage of a class” in the sense used in [Karanth & Mahesh, 2016]. In [Karanth & Mahesh, 2016] (unlike in [Duan et al., 2011]), the “coverage” of a class in a KB is the ratio of

i) the number of instances of this class, to

ii) the number of instances (in the KB).

For a class identified by

“Domain/range coverage of a property” in the sense used in [Karanth & Mahesh, 2016]. In [Karanth & Mahesh, 2016], the “domain coverage”

of a property Comparison to the measure named “coverage” in [Duan et al., 2011]

(this paragraph reuses some parts of Section 2.4.3).

In [Duan et al., 2011], the “coverage of a class

within a dataset” is with respect to the “properties that belong to the class”.

For each of these properties (binary relations from the class), this coverage is (very informally)

the ratio of

i) the number of occurrences of this property in (all) the instances of this class, to

ii) the product of “the number of properties in this class” and

“the number of instances of this class (in the evaluated dataset)”.

This coverage was designed to return 100% when all instances of a class have all the

“properties that belong to the class” (to use the terminology of

[Duan et al., 2011], one more often associated to

some frame-based KRLs than to more expressive KRLs).

To represent and generalize this last expression, C* and its derived functions can exploit the

special variable (or keyword) “$each_applicable_relation” in their 2nd

parameter. This variable specifies that “each relation type (declared in the KB or

KBs it imports) which can be used (e.g., given its definition or signature) should be used

whenever possible, directly or via a subtype”.

E.g., for a class identified by Conclusion wrt. KB evaluation measures.

Current KB evaluation measures that can be categorized as intrinsic completeness measures have

far fewer parameters and do not exploit contextualizations.

Thus, they do not answer the research questions of this article and, unlike KB design recommendations, can rarely be extended to exploit aboutness.

Many of such measures also rely on statistics that are not simple

ratios between comparable quantities (quantities of the same nature), and thus are

often more difficult to interpret.

All these points are illustrated near the end of

Section 2.2 (Comparison to the measure named “coverage” in

[Duan et al., 2011]).

In [Zaveri et al., 2016] (and [Wilson et al., 2022]),

“coverage” is an extrinsic completeness measure since it refers to the

number of objects and properties that are necessary in the evaluated KB for it to be

“appropriate for a particular task“.

Formalizing, Categorizing and Generalizing KB Design Recommendations

/* This section is to be partially rewritten based on the parts below */

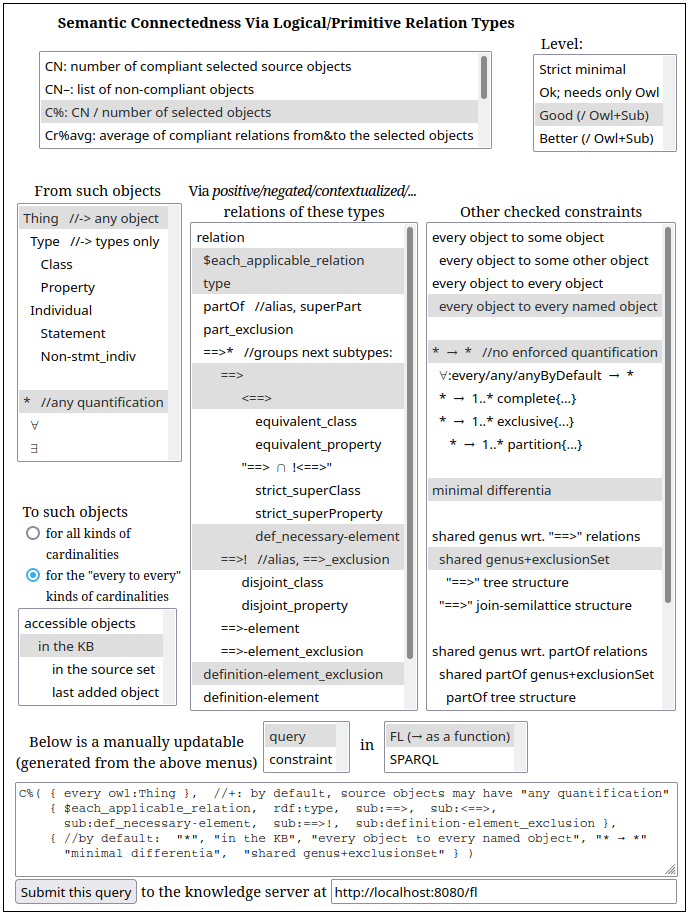

Quick explanation of the interface.

Figure 1 shows a simple graphic user interface for

i) helping people build parameters for some functions like C%,

ii) generating a query (function or SPARQL query) or, in some cases, a SHACL constraint, and

iii) calling a KB server (e.g. a SPARQL endpoint) with the query or constraint.

This server displays the results of the execution of the query or of the adding of the constraint.

Since KB servers rarely accept the uploading of a KB into them, no menu is proposed for that in

this interface.

For functions, this interface was tested with WebKB-2;

for SPARQL+OWL or SHACL, a local Corese server was used.

Each of the points below comments on one menu of the interface.

These points are only meant to give an overview and general ideas about what can be achieved.

In these menus, the indentations always represent specializations.

Parameters in the "To such objects" menus of the simple user interface shown in

Figure 1.

The options of the last two points can be seen as specifying a selective checking of

several KBs. They can also be seen as specifying the checking of a KB with respect to

other KBs.

However, unlike with external completeness measures, these options do not mean checking that the KB

includes some particular content from some particular external sources.

/* This section is to be written - or removed - based on the parts below */

Intrinsic completeness of a unique object (instead of a KB).

This notion might seem counter-intuitive at first but is

naturally derived by

restricting the 1st parameter set of C% to only one particular object,

e.g. by explicitly giving its identifier

(the simple selection menus of Figure 1 do not support the use of such an

identification).

CNΔ (relation usefulness).

/* This section is to be written (or removed) based on the parts below */

Box 1 shows that common criteria or best practices (BPs) for the quality of KBs

can often be categorized as intrinsic completeness ones.

For each such criteria or BP, the types of the exploited relations depend on the

used particular implementation, and the ontologies they come from but.

However, assuming that these ontologies are aligned, a specialization hierarchy of these

implementations could be derived.

Each implementation would also depends on the used underlying approach

– e.g., the one explored in this article –

and the used implementation language.

Explanations: /* This section is to be fully rewritten based on various top-level ontology evaluations

(such as the one described below) and the use of C%% too for these evaluations.

A table will compare the results for the various ontologies. */

Evaluation of a well known foundational ontology

(this paragraph is a summary of Section 3.1).

To illustrate one experimental implementation and validation of this approach,

DOLCE+DnS Ultralite

(DUL) [Gangemi, 2019] – one of the most used foundational ontologies and one

represented in RDF+OWL – has been checked via

DOLCE+DnS Ultralite

(DUL) [Gangemi, 2019] is one of the best known – or most used –

foundational ontologies

and is fully represented in OWL.

This section reports and analyses some intrinsic completeness measures of a slight

extension of this ontology (DUL+D0, from the same author) and, more precisely,

its last version in April 2020

(D0 1.2 +

DUL 3.32;

OWL/Turtle versions of April 14th, 2019).

For understandability and analysis purposes, an

FL-based and modularized slight extension has also been made [Martin, 2020].

DUL+D0 has 84 classes and 112 relation types.

The classes have for uppermost type For DUL+D0, without making any assumption,

Given the names and informal definitions of the types in DUL+D0, it is clear

that all its subtype relations are meant to be strict. With that first interpretation

assumption, the result is 1/84 instead of 0%:

only However, another interesting and rather safe interpretation assumption for

A third and more risky interpretation assumption for Similarly, regarding relation types in DUL+D0,

Given the previous results, it did not seem necessary to show the results of

an evaluation for another top-level ontology, nor for a general ontology such as DBpedia

where there are relatively few exclusion relations. Indeed, the DBpedia of April 2020

included only 27 direct exclusion relations, 20 of them from the class named "Person"

(this can be checked at http://live.dbpedia.org/sparql), while it included more than

5 million direct Rationale for checks via SPARQL or SHACL.

Nowadays, many KBs are accessible to Web users via a SPARQL endpoint and sometimes only this way.

Thus, for Web users that would like to check if a KB is sufficienty well-organized for

being reused or for warranting further tests via a full download of the KB (using a static

file or queries), issuing some SPARQL queries is interesting.

Some KB developers also use SPARQL queries for some checks

– e.g. the one of ontology patterns, as in

[Sváb-Zamazal1, Scharffe & Svátek,

2009] – for example because the KRL they use is not powerful enough to represent

these patterns in a way that support their checking or because the inference engine they use

would not be powerful enough to exploit these ways.

To support some checks, instead of using queries or adding knowledge to the KB, constraints

can be represented (into the KB or, if a different language is used, in a separate file).

For constraint, the W3C proposes the use of SHACL

(Shapes Constraint Language) [Knublauch & Kontokostas, 2017].

Experimental validation via Corese.

The SPARQL queries or operations – and SCHACL constraints – proposed in this

section have been validated experimentally using

Corese [Corby & Faron-Zucker, 2015],

a tool which includes an OWL-RL inference engine,

a SPARQL (1.1) engine, and

a SHACL validator.

Assumption for all SPARQL queries in this article: only positive or negated relations are

taken into account. In the rest of this article, the constraint “ Rationale for such queries.

Section 4 shows the interest that a KB has for inconsistencies

and redundancies detection, search purposes and, more generally, inference purposes,

if this KB is “universally complete wrt. implication,

generalization, equivalence and exclusion relations” (i.e., if, for any pair of objects,

the used inference engine knows whether one object has implication, generalization, equivalence or

exclusion relations to the other, or the conditions when such relations exist, or if

they cannot exist; the expresssion “universally complete wrt. some relations”

was informally introduced by Section 2.2).

The present section shows how such an intrinsic completeness can be a checked using SPARQL, with

the following restriction: only “relations between types” from OWL-RL|QL (i.e., from

OWL-RL or OWL-QL) are checked

and hence only “universal completeness of types wrt. generalization, equivalence and

exclusion relations”.

Indeed,

i) OWL is one de facto standard KRL model and, when an inference engine is

used together with a SPARQL engine, it is most often an OWL engine,

ii) OWL engines are often restricted to a particular OWL profile, typically

OWL-RL, OWL-QL or OWL-EL,

iii) OWL only provides generalisation types between types, not between statements,

iv) allows one to express that a type is not subtype of another only via OWL-Full

(which is not supported by current OWL engines) or by using a disjointness

relation (i.e., by stating that a types cannot be subtype of another), and

v) OWL-EL, the third common profile of OWL(2), does not support disjoint properties.

Query 1: implementation of

Thus, this query checks all the ways a relation of type Query 2: implementation of

Adaptations of the two previous queries for the

“every object to some other object” and

“every object to some object” kind of cardinalities.

Below is the counterpart of Query 1 for the first of these two kinds of cardinalities

– and, with the last “#” removed, the counterpart of

Query 2 for the first kind.

This implementation

To obtain the counterpart of Query 1 for the second kinds of cardinalities, the

“ Query 3: implementation of Adaptation of Query 3 for the

“every object to some other object” cardinalities.

Adding or lifting restrictions. Queries for CN can be made more restrictive by

adding more tests but, as illustrated with Query 2, more tests relax queries for CN–.

E.g, more relations or more precise ones may be checked, and the function Counterparts of the previous queries for the use of CN and C%

(instead of CN–, with the same parameters).

Shortcuts for combinations of OWL types. This subsection illustrates some of the many

type definitions (in the sense given in Section 2.1.3)

made in the Sub ontology [Martin, 2019]

i) to support the writing of complex specifications, and more importantly,

ii) to ease the development of KBs complying with these specifications, especially

those leading to types that are “universally complete wrt. generalization, equivalence

and exclusion relations”.

Using more complex queries when less powerful inference engines are used.

These type definitions are made using OWL but many of them use

i) Generalization of OWL types for relations from classes.

In this article, Using (in-)complete sets of (non-)exclusive subtypes.

It seems that an efficient way to build a KB where types are

“universally complete wrt. generalization, equivalence and exclusion relations” is,

when relating each type to one or several direct subtypes of it, to use

i) a subtype partition, i.e. a disjoint union of subtypes equivalent to the

subtyped type (that is, a complete set of disjoint subtypes), and/or

ii) “incomplete sets of disjoint subtypes”, and/or

iii) “(in-)complete sets of subtypes that are not disjoint but still

non-equivalent (hence different) and not relatable by Properties easing the use of (in-)complete sets of (non-)exclusive subtypes, and

non-exclusion relations between types of different sets.

Below is the list of properties that are defined in Sub (using OWL and, generally, SPARQL

update operations too) to help representing all the relations mentioned in the

previous paragraph, in a concise way, hence in a way that

i) is not too cumbersome and prone to errors, and

ii) makes the representations more readable and understandable by the readers once the

properties are known to these readers. Here is what this example representation would be using only OWL properties and

Here is what the example representation would be using only OWL properties, not

mentioning the This last example representation is both less precise and still visually less structured than the

first previous one. When an ontology has many relations, any kind of visual structure is important

to help design it or understand it.

For an OWL engine, despite the use of SPARQL definitions and for the reasons

given in the third paragraph of this subsection (or similar reasons), using these last four

properties is only equivalent to the use of Definition of In OWL-Full, the use of Adaptation of queries in the previous subsection for them to use only one relation type.

From any object, checking various relations (of different types) to every/some object

is equivalent to checking one relation that generalizes these previous relations.

The Sub ontology provides types for such generalizing relations since these types ease the writing

of queries.

However, these types have to be defined using These general types may also be useful for constraint-based checks, as illustrated in the

next subsection.

For checking 100% compliances.

Like OWL, SHACL (Shapes Constraint Language)

[Knublauch & Kontokostas, 2017] is a language

– or an ontology for a language – proposed by the W3C.

Unlike OWL, SHACL supports constraints on how things should be represented within an RDF KB.

SHACL can be decomposed into

i) SHACL-core, which cannot reuse SPARQL queries, and

ii) SHACL-SPARQL, which can reuse them.

CN and C% are not boolean functions and hence a full implementation of their

use cannot be obtained via SHACL. However, SHACL can be used to check that a KB is 100%

compliant with particular specifications expressed via C%.

SHACL counterpart of Query 2.

The “every object to every object” cardinalities cannot be checked via SHACL-core

but here is the C% related counterpart of Query 2

(Section 3.1.1) in SHACL-SPARQL.

SHACL counterpart of Query 2 for the

“every object to some object” cardinalities. Here, SHACL-core can be used.

Use of alternative languages.

Other languages could be similarly used for implementing intrinsic completeness evaluations,

e.g. SPIN

(SParql Inferencing Notation) [w3c, 2011],

a W3C language ontology that enables i) the storage of SPARQL queries in RDF and,

ii) via special relations such as

So far, in all the presented uses of CN and C%, their 2nd parameter only included

particular named types. With uppermost types such as

However, one may also want to check that each particular property associated to a class

– via relation signatures or the definitions of this class –

is used whenever possible (hence with each instance of this class) but in a relevant way,

e.g. using negations or contextualized statements when unconditional affirmative statements

would be false.

Such checking cannot be specified via a few named types in the 2nd parameter of CN and C%.

This checking may be enforced using constraints (like those in database systems or those

in knowledge acquisition or modelling tools). However, with many constraint languages,

e.g. SHACL, this checking would have to be specified class by class, or property by property,

since the language would not allow quantifying over classes and properties:

“for each class, for each property associated to this class”.

Instead, special variables (or keywords) such as

“ A variant of the previous variable may be used for taking into account only definitions.

With this variant, every evaluated object must have all the relations prescribed by the

definitions associated with the types of the object.

Unlike with this variant, when using A variant may also be used for only taking into account all definitions of

necessary relations. It should be noted that many relations cannot be defined as

necessary, e.g., Here are SPARQL queries that respectively exploit

i) The KB evaluation measure closest to C% seems to be the one described in [Duan et al., 2011].

The authors call it “coverage of a class (or type) within a dataset” (the

authors use the word “type” but a class is actually referred to).

This coverage is with respect to the “properties that belong to the

class”. For each class and each of its properties, this coverage is the ratio of

i) the number of occurrences of this property from the instances of this class, to

ii) the number of properties of this class, and (i.e. also divided by)

iii) the number of instances of this class (in the evaluated dataset).

Hence, this coverage returns 100% when all instances of a class have

all the “properties that belong to the class (or type)” (this is the

terminology used in [Duan et al., 2011]).

Thus, this coverage metric is akin to intrinsic completeness measures.

Unlike CN or C%, it is restricted to

the case described in the previous paragraph and, at least according to the used descriptions

and terminology, does not take into account negations, modalities, contexts, relation signatures

or relations such as In [Zaveri et al., 2016], “coverage” refers to the number of objects and

properties that are necessary in the evaluated KB for it to be “appropriate for a

particular task”.

In [Karanth & Mahesh, 2016], the “coverage” of a class or property is the

ratio of i) the number of individuals of this class or using this property

(directly or via inferences), to ii) the number of individuals in the KB.

This last metric is not a intrinsic completeness measure since, for the given type,

“being of that type or using that type” is not a constraint or requirement.

Quantifiers for the first selection of objects and relations.

The definitions of CN and C% for the

“every object to every object” default cardinalities and

for the “every object to some object” cardinalities

have been given in exSection 2.3.

Figure 1 showed these two kinds of cardinalities

as options to be selected.

Via examples, Section 3.1 shows how

the second kind of cardinalities can be implemented in SPARQL and SHACL.

Section 5.2 also shows a SPARQL query for this second kind

of cardinalities.

All the other queries are for the default kind of cardinalities.

These two kinds are about object selection: given the 1st

parameter of CN and C%, i.e. the type for the source objects,

i) “which instances to check?” and,

ii) from these instances, and given the relation types in the 2nd parameter,

“which destination objects to check?”.

Other variations may be offered for this selection, e.g.

i) a type for the destination objects, and

ii) whether the source or destination objects should be named

(i.e. be named types or named individuals, as opposed to type expressions or blank nodes).

Furthermore, one may also want to consider objects which are reachable from the KB.

Indeed, a KB may reuse objects defined in other KBs and object identifiers may be URIs which

refer to KBs where more definitions on these objects can be found. This is abbreviated by

saying that these other KBs or definitions are reachable from the original KB.

Similarly, from this other KB, yet other KBs can be reached.

However, this notion cannot be implemented with the current features of SPARQL.

Nevertheless, below its “Class of the source objects” selector, Figure 1

shows some options based on this last notion and object naming.

Quantifiers for the second selection.

Whichever the cardinalities or variation used for this first selection, each

relation to check from or between the selected objects also has a source and a destination.

Thus, a second selection may be performed on their quantification:

the user may choose to accept any quantification (this is the default option) or

particular quantifiers for the source or the destination.

In Figure 1, “*” is used for referring to any

object quantification and thus “* -> *” does not impose any restriction on

the quantification of the source and destination of the relations to be evaluated.

The rest of this subsection progressively explains the specializations of

“* -> *” proposed in Figure 1.

Unquantified objects

– i.e. named types, named statements and named individuals –

are also considered to be universally and existentially quantified.

Since type definitions of the form “any (instance of) <Type> is a ...” (e.g.,

“any Cat is a Mammal” or “Cat rdfs:subClassOf Mammal”) are

particular kinds of universal statements, in Figure 1

i) the expression “ In Figure 1,

in addition to “* -> *”, more specialized options are

proposed and “ Representation of some meanings of alethic modalities in languages that

do not fully support such modalities.

When a set of statements fully satisfies a specification made via C%,

none of these statements has an unknown truth value: if they are neither unconditionally

false nor unconditionally true, their truth values or conditions for being true are still

specified, e.g. via modalities (of truth/beliefs/knowledge/...), contexts or fuzzy logic.

In linguistics or logics, alethic modalities indicate modalities of truth, in particular

the modalities of logical necessity, possibility or impossibility. There are first-order

logics compatible ways – and ad-hoc but OWL-compatible ways – of

expressing some of the semantics of these modalities.

Given the particular nature of these different kinds of statements,

selecting which kinds should be checked when evaluating a set of objects may be useful.

To improve the understandability of types,

as well as enabling more inferences, when defining a type,

a best practice (BP) is to specify

its similarities and differences with

i) each of its direct supertypes

(e.g., as in the genus & differentia design pattern), and

ii) each of its siblings for these supertypes.

[Bachimont, Isaac & Troncy, 2002]

advocates this BP and names it the “Differential Semantics” methodology

but does not define what a minimal differentia should be, nor generalize this

BP to all generalization relations, hence to all objects (types, individuals, statements).

For the automatic checking of the compliance of objects to this generalized BP,

i) Figure 1 proposes the option “minimal differentia”, and

ii) the expression "minimal differentia between two objects" is defined as

referring to a difference of at least one (inferred or not) relation in the

definitions of the compared objects: one more relation, one less or

one with a type or destination that is semantically different.

Furthermore, to check that an object is different from each of its generalizations,

a generalization relation between two objects does not count as a

“differing relation”.

More precisely, with the option “minimal differentia”,

each pair of objects which satisfies all the given requirements

– e.g., with the “every object to some object” cardinalities,

each pair of objects connected by at least one of the relation types

in the 2nd parameter –

should have the above defined “minimal differentia” too.

Thus, if Hence, using CN or C% with the above cited definition is a way to

generalize, formalize and check the compliance with the

“Differential Semantics” methodology.

Section 4.1 highlights that a KB

where hierarchies of objects can be used as

decision trees is interesting and that one way to achieve this is to use

at least one set of exclusive direct specializations when specializing an object.

Systematic differentia between objects is an added advantage for the exploitation of

such decision trees, for various purposes: knowledge categorization, alignment,

integration, search, etc.

Minimal differentia example. If the type SPARQL query. Here is an adaptation of Query 1 from

Section 3.1 to check the compliance of classes

with the above defined “minimal differentia” option. This adaptation is the

addition of one Besides highlighting some interests of using

at least one set of exclusive direct specializations whenever specializing an object,

Section 4.2 reminds that this is

an easy way to satisfy Figure 1 proposes a weaker and hence more general option: one with

which only the first constraint is checked, not the second.

It also proposes other specializations for this weaker option:

“ "==>" tree structure ” and

“ "==>" join-semilattice structure ”.

In the first case, all the specializations of an object

are in the same exclusion set.

In the second case, any two objects have a least upper bound.

Both structures have advantages for object matching and categorization.

Other cases, hence other structures, could be similarly specified, typically one for

the full lattice structure. This one is often used by automatic categorization methods

such as Formal Concept Analysis.

Figure 1 also shows that similar options can be proposed for

partOf hierarchies, hence not just for “ Overview. This subsection first defines “ Definition of “ Here are some consequences:

Definition of “ Comparability and uncomparability (via “ Definition of “ Definition of “ Definition of “ Definition of “ Definition of “ Let “ Figure 2 further illustrates the idea of the first point as well as an additional one

regarding the 2nd parameter: replacing some types by more precise ones in this parameter

leads to a specification that is more focused, hence more normative, but less generic.

The possible combinations of “ From the points made in the two previous paragraphs, it can be concluded that using

at least “ Section 2.2 gave

introductory examples about how the use of

subtypeOf relations – or negations for them, e.g. via

disjointWith or complementOf relations –

supports the detection or prevention of some incorrect uses of all such relations as well as

instanceOf relations.

The general cause of such incorrect uses is that some knowledge providers do not know

the full semantics of some particular types, either because they forgot this semantics or

because this semantics was never made explicit.

The following two-point list summarizes the analysis of [Martin, 2003] about the most

common causes of the 230 exclusion violations that were automatically detected after

some exclusion relation were added between some top-level categories of WordNet 1.3

(those which seemed exclusive given their names, the comments associated to them,

and those of their specializations).

What such violations mean in WordNet is debatable since it is not an ontology but

i) in the general case, they can at least be heuristics for bringing more precision

and structure when building a KB,

ii) most of these possible problems do not occur anymore in the current WordNet (3.1),

and

iii) the listed kinds of problems can occur in most ontologies.

Within or across KBs, hierarchies of types may be

at least partially redundant. This expression means that at least some types

can be derived from others or could be derived if

particular type definitions or transformation rules were added to the KB.

Implicitly redundant subtype hierarchies are those with

non-automatically detectable redundancies between these hierarchies.

One way to reduce such implicit redundancies,

and thus later make the hierarchies easier to merge (manually or automatically),

is to cross-relate their types by subtypeOf relations or equivalence relations (and, as the next

paragraph shows, negations for them), whenever these relations are relevant.